This blog post is based on a recent article “Communication Evaluation and Measurement: Connecting Research to Practice” by Dr. Alexander Buhmann, Fraser Likely and Dr. David Geddes of the Task Force on Standardization of Communication Planning/Objective Setting and Evaluation/Measurement Models. For the full article, visit the Journal of Communication Management (Vol. 22 No. 1, 2018 pp. 1-7).

Much has been written about the decades-long evolution of evaluation and measurement (E&M) thinking and practices in public relations and strategic communication.[1] While taking that history into consideration, our January 2018 paper set out to explore more recent developments and to comment on the current state of evaluation and measurement.

We examined developments over the last decade, from 2008 to today. In particular, we looked through the lens of the E&M interest community. Unlike other subject-matter areas in public relations or strategic communication, evaluation and measurement has a fairly well-defined community of practice. That community comprises both academics and professionals, with overlapping involvement in such groups as the IPR Measurement Commission; the Association of Measurement and Evaluation in Communications (AMEC); the Task Force on Standardization of Communication Planning/Objective Setting and Evaluation/Measurement Models; the German Public Relations Society (DPRG) and the International Controller Association’s (ICV) Value Creation Through Communication Task Force; and the Coalition for Public Relations Research Standards.

Notable developments in this most recent period include the movement to ban the use of Advertising Value Equivalency (AVE) and the inappropriately used term Return on Investment (ROI); the AMEC community-sourced, original Barcelona Principles in 2010 and the revised version in 2015; the DPRG & ICV’s Communication Controlling model; AMEC’s Integrated Evaluation Framework; AMEC’s International Certificate in Measurement and Evaluation; the Public Relations Research Standards Center; the United Kingdom’s Government Communication Services’ GCS Evaluation Framework; and the Public Relations Institute of Australia’s (PRIA) Measurement and Evaluation Framework.

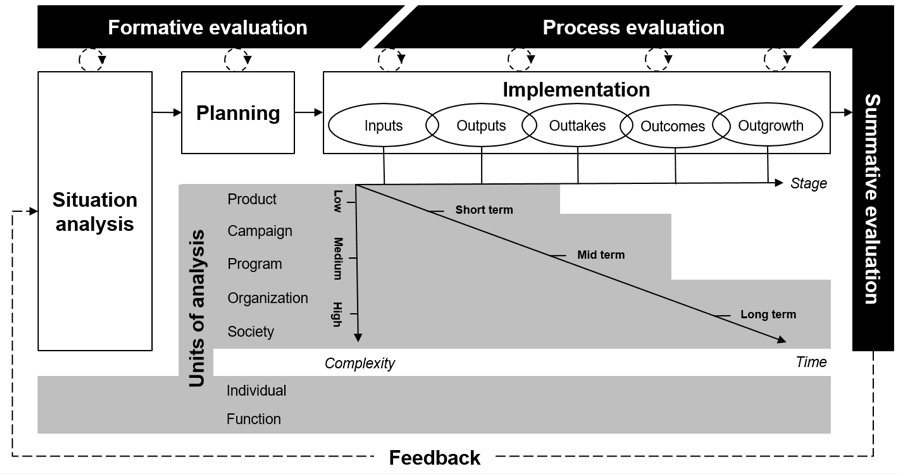

Figure: Dimensions of evaluation in strategic communication: a holistic framework (from Buhmann & Likely, 2018, “Evaluation and Measurement” in The International Encyclopedia of Strategic Communication)

With these developments, we concluded that Jim Grunig’s famous ‘cri de coeur’ (so labeled by Tom Watson) had been heard, at least by the E&M interest community. Years ago, Jim said, “I feel more and more like a fundamentalist minister railing against sin; the difference being that I have railed for evaluation in public relations practice. Just as everyone is against sin, so most public relations people I talk to are for evaluation. People keep on sinning […] and PR people continue not to do evaluation research.” The recent developments have moved this community of practice upwards on Jim’s Levels of Analysis ladder: from focusing primarily on the evaluation and measurement of communication products and activities, communication channels and media, and communication messages (i.e., mostly inputs and outputs) to focusing on the evaluation and measurement of communication projects and campaigns and their tangible impact on the organization (i.e., outcomes and outgrowth).

Based on the most recent developments in research and practice, the overall evaluation and measurement process can be structured along five fundamental dimensions (see Figure):

- The basic planning/evaluation cycle that is at the core of any evaluation and constitutes its inherent link with situational analysis, planning, and implementation. Particularly when the overall strategic communication process is viewed as a process of rational decision-making, evaluation becomes visible as an inherent element of this basic cycle.

- The three general and sometimes overlapping types of evaluation (formative, process, summative), ordered alongside this basic cycle.

- The various possible units of analysis that can constitute the object of evaluation efforts across a variety of organizational subsidiaries, projects, and activities ranging from low to high complexity (i.e., product, project, program, organization, society, individual practitioner, or function).

- The different stages of evaluation during implementation, chosen to structure selected evaluation milestones, measures, and methods (such as input, output, outtakes, outcomes, and outgrowth/impact).

- The feedback provided by evaluations, which can take two possible forms: a) reporting and performance review, or, more generally, b) strategic insights and learning.

Throughout the intense debate on evaluation of strategic communication, all models and frameworks have focused on some, though never all, of these dimensions. While the interest community has moved aggressively towards a more holistic understanding of evaluation and more sophisticated measures, surveys suggest that, in the wider profession, the commonly found barriers remain, in whole or in part. These barriers are a lack of: time; budget; organizational performance culture and thus management demand and support; advanced E&M knowledge and competencies; access to more sophisticated research methodologies or tools; common industry models and standards; and/or communication department involvement in organizational strategic management.

In attempting to extrapolate these findings over the coming decade, we argued that the E&M interest community still has a way to go before it can give the average communication department a leg up over these common barriers. We made six suggestions.

- First, the one key area for successfully connecting the specialty discourse of the E&M interest community to the actual mainstream of practice is that of standards, and here more work needs to be done.

- Second, most of the progress in the E&M debate has been on assessing whether communication campaigns have been effective, but a solely effectiveness-based view limits important E&M capacities for insights and learning.

- Third, there is a widespread neglect of the evaluation of internal services provided by communication departments, such as intelligence gathering, cross-organizational data aggregation and insight analysis, strategizing and planning, education and training, coaching, and counsel.

- Fourth, many evaluation frameworks downplay the role of intervening variables. The frameworks investigate cause-and-effect relationships, but there is little effort to widen this view to include additional variables from the social context in which a message or program is embedded.

- Fifth, different fields have all developed their own evaluation models, methods, and measures. Closer ties between related fields can help both academia and practice overcome the lack of diversity and interdisciplinary cooperation.

- Sixth, the E&M interest community needs to become even more active in disseminating its work into the wider practice, because there is still a clear disconnect between engaged groups of academics, agencies, consultants and client organizations on the one hand and much of the profession on the other.

All that said, the last decade saw more consensus within the greater E&M community of practice than the previous three decades combined. This E&M interest community is unique within the field: the more it can work together with shared purpose, the faster the profession will overcome those barriers and be able to provide the PR/Strategic Communication function with a more comprehensive evaluation and measurement framework. Such a framework would be based on seven separate units of evaluation analysis for performance measurement: communication product/channel/message; communication project/campaign; stakeholder programs; organizational impact; societal impact; individual employee performance; and communication department performance.

Fraser Likely, Fraser Likely PR/Communication Performance, IPR Measurement Commission, AMEC, likely@intranet.ca

Dr. Alexander Buhmann, BI Norwegian Business School, alexander.buhmann@bi.no

Dr. David Geddes | Geddes Analytics LLC | Westmeath Global Communications Advisor | IPR Measurement Commission | david.geddes@geddesanalytics.com

The authors would like to thank the other members of the International Task Force on Standardization of Communication Planning/Objective Setting and Evaluation/Measurement Models (namely: Forrest Anderson, Dr. Mark-Steffen Buchele, Dr. Nathan Gilkerson, Dr. Jim Macnamara, Tim Marklein, Dr. Rebecca Swenson, Sophia Charlotte Volk, and Michael Ziviani) for their constructive feedback on the original version of the article published in the Journal of Communication Management.

References

[1] For an excellent history of E&M academic research, see Sophia Volk https://www.sciencedirect.com/science/article/pii/S0363811116300765

For a detailed background on the evolution of academic and practitioner E&M thinking and practice, see

Walt Lindenmann https://instituteforpr.org/wpcontent/uploads/PR_History2005.pdf

Tom Watson and Paul Noble (Evaluating Public Relations: A Best Practice Guide to Public Relations Planning, Research and Evaluation, 2007; 2014)

Don Stacks and David Michaelson (A Practitioner’s Guide to Public Relations Research, Measurement and Evaluation, 2010; 2014)

Fraser Likely and Tom Watson https://instituteforpr.org/wp-content/uploads/LikelyWatson-Book-Chapter-Measuring-the-Edifice.pdf

Jim Macnamara and Fraser Likely https://instituteforpr.org/wp-content/uploads/Revisiting-the-Disciplinary-Home-of-Evaluation-New-Perspectives-to-Inform-PR-Evaluation-Standards.pdf

Jim Macnamara (Evaluating Public Communication: Exploring New Models, Standards, and Best Practice, 2017) and Alexander Buhmann and Fraser Likely (“Evaluation and Measurement” in The International Encyclopedia of Strategic Communication, in press).