This blog post is provided by the IPR Measurement Commission in celebration of Measurement Month in November.

There have been major advances in measurement and evaluation (M&E) in terms of the technology and tools available, which now include no-cost web analytics tools such as Google Analytics (basic version) and low-cost applications such as Hootsuite for social media analysis. Such tools dispense with the frequent claim that lack of budget prevents M&E.

However, a problem identified as early as 1985 continues to contribute to what some researchers call “stasis” or a “deadlock” in M&E. A leading PR textbook pointed out in its 1985 edition that “the common error in program evaluation is substituting measures from one level for those at another level.”[1] This warning was repeated in subsequent editions and was echoed by eminent PR scholar Jim Grunig, who defined ‘substitution error’ as the use of “a metric gathered at one level of analysis to show an outcome at a higher level of analysis.”[2]

Grunig should have said ‘claim’ an outcome, because low-level metrics, such as counts of media messages and share of voice, do not “show” an outcome. Substituting such metrics for evidence of outcomes and impact results in half-way evaluation – that is, it shows that communication is, at best, half way to achieving outcomes and impact.

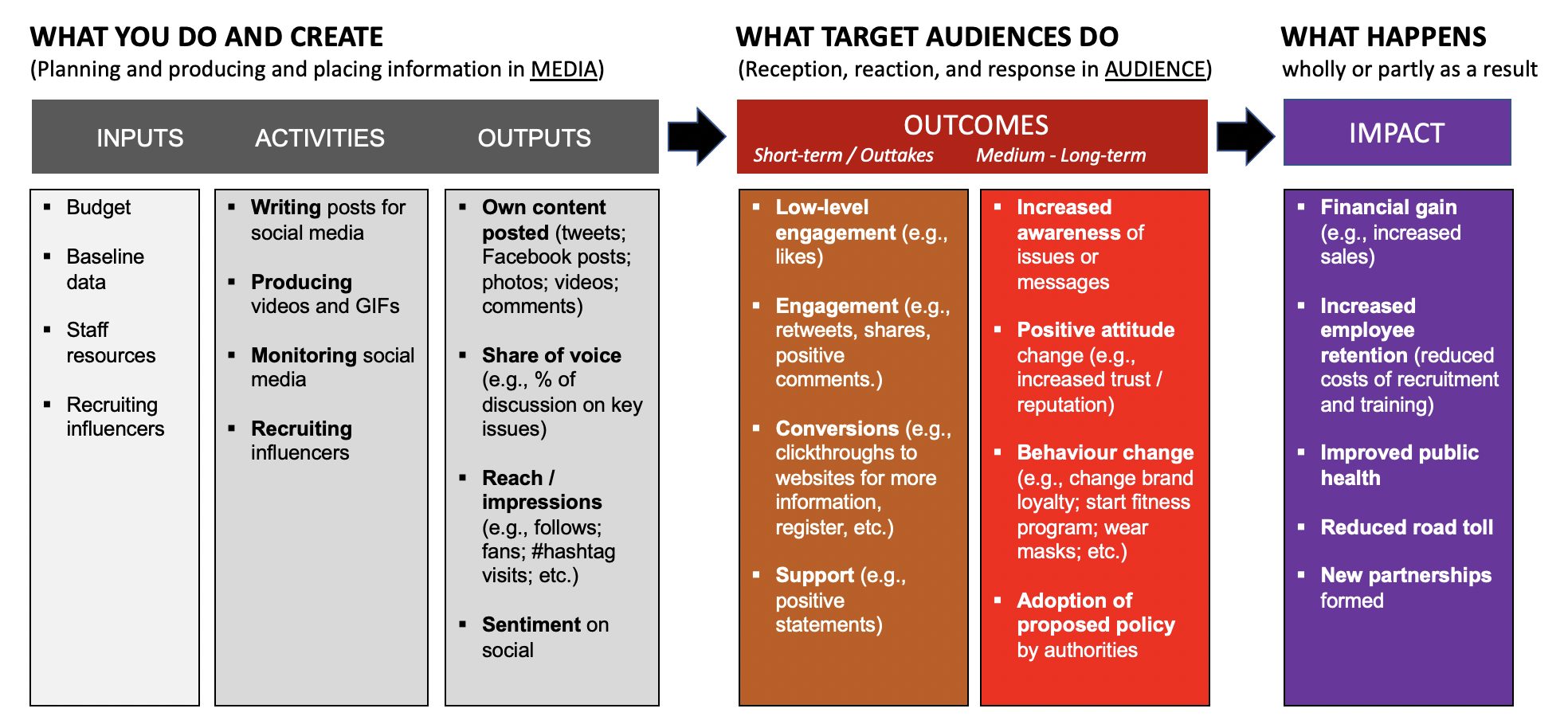

Continuing occurrences of substitution error are possibly the result of ineffective communication on the part of educators and M&E advocates. So here, a visual illustration is used to support a written explanation, as well as two simple tests to identify valid metrics. Figure 1 shows a basic program logic model – a widely used illustration of the stages and steps in planning and implementing programs and projects used in many fields – applied in this instance to social media M&E.

Program logic models typically identify five or six stages in planning and implementing what is generically called a program. The most common stages are inputs, activities, outputs, outcomes, and impact. Some models separate short-term outcomes – often referred to as outtakes in public relations – from medium- to long-term outcomes.

Substitution error occurs when metrics (numbers) or qualitative indicators of successful activities or outputs are presented as evidence of outcomes or impact. In particular, output indicators are frequently substituted for outcome indicators. For example, in public relations, the widely used metrics of reach, impressions, and sentiment are often erroneously claimed to be indicators of outcomes.

A key to understanding the important difference between activities and outputs, on the one hand, and outcomes, on the other, is what I call the ‘doer test’ which, as the name suggests, involves identifying the doer of the action. The ‘doer test’ reveals that:

1.) Activities and outputs are what you do and create as a would-be communicator.

2.) Outcomes are what your target audiences do because of your activities and outputs.

Another simple test to identify whether a metric or indicator relates to outputs or outcomes is the ‘site test’. The ‘site test’ identifies where the reported action or phenomenon occurs. If it occurs in media, it is an output. Media are channels that distribute (put out) information and messages. Media content may be sent in the direction of audiences. But, for many reasons, such as selective attention, cognitive dissonance, confirmation bias, and belief persistence, it cannot be assumed that messages are received, or that messages in media equate to audience awareness, attitudes, or intentions. Outcomes occur in the minds and behaviour of target audiences.
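For readers who keep their metrics in a spreadsheet or dashboard, the two tests can be sketched as a small classification routine. This is only an illustration: the `Metric`, `Stage`, `classify`, and `is_substitution_error` names, the `"communicator"`/`"audience"` and `"organization"`/`"media"`/`"audience"` labels, the order in which the tests are applied, and the example metric "surveyed awareness" are all assumptions made for the sketch, not part of any established M&E tool or standard.

```python
from dataclasses import dataclass
from enum import IntEnum


class Stage(IntEnum):
    """Program logic stages covered by the two tests, ordered low to high."""
    ACTIVITY = 1
    OUTPUT = 2
    OUTCOME = 3


@dataclass(frozen=True)
class Metric:
    name: str
    doer: str  # hypothetical labels: "communicator" or "audience"
    site: str  # hypothetical labels: "organization", "media", or "audience"


def classify(metric: Metric) -> Stage:
    """Apply the site test, then the doer test, to a single metric."""
    # Site test: whatever occurs in media is an output.
    if metric.site == "media":
        return Stage.OUTPUT
    # Doer and site tests agree: the audience does it, in its own
    # thinking or behaviour, so it is an outcome.
    if metric.doer == "audience" and metric.site == "audience":
        return Stage.OUTCOME
    # What the communicator does and creates in-house is an activity.
    if metric.doer == "communicator" and metric.site == "organization":
        return Stage.ACTIVITY
    # Any other combination needs human judgement (assumption of this sketch).
    raise ValueError(f"doer and site tests disagree for {metric.name!r}")


def is_substitution_error(metric: Metric, claimed: Stage) -> bool:
    """True when a metric is reported at a higher stage than the tests support."""
    return claimed > classify(metric)


reach = Metric("reach", doer="communicator", site="media")
awareness = Metric("surveyed awareness", doer="audience", site="audience")

print(classify(reach).name)                             # OUTPUT
print(is_substitution_error(reach, Stage.OUTCOME))      # True
print(is_substitution_error(awareness, Stage.OUTCOME))  # False
```

The sketch deliberately raises an error when the two tests point in different directions, since such cases call for a judgement that a lookup rule cannot make.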

Figure 1. Dissected program logic model showing the ‘doer’ and the ‘site’ of activities, outputs, and outcomes.

Reach, impressions, and sentiment (also referred to as tone or positivity) are media metrics and indicators. They report the potential size of the audience reached; the number of times the target audience was potentially exposed to your messages; and the extent to which media content is favourable or unfavourable towards your organization and/or its activities. Media metrics do not tell us what audiences actually saw or heard.

Similarly, the so-called sentiment of media content, which is often presented as an outcome of PR, is no indication of the sentiment in target audiences. In fact, the term sentiment is erroneously applied to media content because sentiment is “an attitude, thought, or judgment prompted by feeling”[3] that occurs in humans. The qualitative characteristics of media content are more accurately described as tone, or as positive, negative, or neutral.

In summary, as Figure 1 shows, what you do and create, such as planning and producing content and information materials, are activities. What appears in media or other channels, including content placed by PR practitioners, are outputs. What the audience thinks and does as a result are outcomes. Audience reception, reaction, and response can be cognitive (thinking); affective (emotional connection); conative (forming an intention); or behavioural.

Impact refers to what happens, wholly or in part, as a result of the outcomes you achieve through communication. Often, impact is ‘downstream’, occurring some time later and as a result of multiple influences. While impact is often difficult to attribute, PR and communication practitioners at least need to be able to report outcomes to show effectiveness.

To show effectiveness, PR and communication practitioners need to look beyond media, which are a half-way point in public communication. Media metrics and indicators are, at best, a poor proxy for outcomes, based on assumptions. At worst, they are half-way evaluation. Collection of data that show audience reception, reaction, and response allows practitioners to go all the way in M&E to show results and value.

Jim Macnamara, Ph.D., is a Distinguished Professor in the School of Communication at the

[1] Cutlip, S., Center, A., & Broom, G. (1985). Effective public relations (6th ed.). Prentice-Hall, p. 295.

[2] Grunig, J. (2008). Conceptualizing quantitative research in public relations. In B. van Ruler, A. Verčič, & D. Verčič (Eds.), Public relations metrics, research and evaluation (pp. 88–119). Routledge, p. 89.

[3] Sentiment. In Merriam-Webster Dictionary. https://www.merriam-webster.com/dictionary/sentiment