The Behavioral Communications research program is sponsored by ExxonMobil, Public Affairs Council, Mosaic, and Gagen MacDonald.

This is the second post in a three-part blog series that launches IPR’s Behavioral Communications research program. Each week will focus on a different aspect of Behavioral Communications.


Back to Our Roots: The Science Beneath the Art of Public Relations™

While public relations once called itself an applied social science, it now risks being left behind as an anachronism. After decades of fine-tuning its execution in media relations, mapping influencers, and developing detailed plans to segment and target separate audiences for messaging, PR has not kept pace with scientific discoveries in behavioral economics, neuroscience, and narrative theory. But now, by connecting three historic accidents and discoveries, we can revitalize this social science, bringing far more effectiveness and scientific grounding to the art of narrative that lies at the very core of successful public relations and all communications. This three-part series reveals three compelling lessons communicators can learn from three historic scientific events.

Part Two: The Backfire Effect & How to Change Minds

Changing someone’s mind, persuading them to rethink their position, can feel nearly impossible. While the ubiquity of information should provide enough public-domain evidence to settle every argument, the opposite has happened: facts polarize people rather than bring them together in a moment of epiphany. The English philosopher Francis Bacon articulated this four centuries ago, writing:

“The human understanding when it has once adopted an opinion (either as being the received opinion or as being agreeable to itself) draws all things else to support and agree with it. And though there be a greater number and weight of instances to be found on the other side, yet these it either neglects and despises, or else by some distinction sets aside and rejects; in order that by this great and pernicious predetermination the authority of its former conclusions may remain inviolate.”

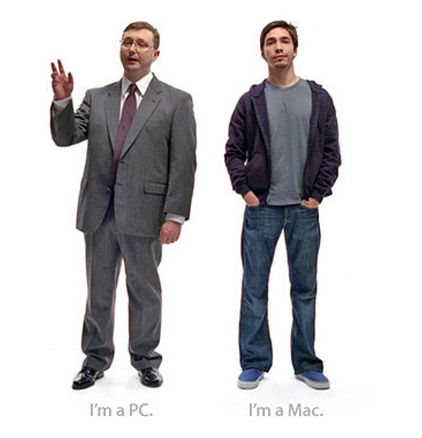

In 1979, psychology professor and author Charles G. Lord sought answers[1] as to whether we might overcome the Bacon principle, or whether humans are always held hostage to their initial beliefs, even in the face of compelling and contradictory evidence. After sorting respondents into two groups according to whether they believed capital punishment is an effective deterrent to crime, he supplied each group with a summary of research showing either that capital punishment is or is not effective. That was followed by a more robust, scientifically sound study supporting the summary. Then he exposed each group to different research with the opposite findings. Rather than softening their initial beliefs when evidence challenged them, each group discounted the research that did not align with their pre-existing beliefs, judging it less sound than the research that agreed with them. Scientists call this phenomenon “confirmation bias.” Lord and his co-researchers concluded that objective evidence “will frequently fuel rather than calm the fires of debate.”

Since then, an entire field of research around confirmation bias (sometimes also called “motivated reasoning”) has sprung up. It may not have been particularly surprising that people cling to their beliefs so tightly that they filter out any evidence that challenges them, but the experiment also produced an unexpected finding: a backfire. Indeed, it is now called the “backfire effect.” Research has found that when people are presented with evidence that they may be wrong, they not only discount that evidence but also become even more extreme in their original belief.

Drew Westen, a professor in the departments of psychology and psychiatry at Emory University, performed an updated version of Lord’s experiment using fMRI brain scans. He had subjects self-identify their political views and split them into two groups. He showed them their rival party’s presidential candidate reversing himself on an issue. He then showed them their own favorite candidate also reversing himself. Just as in Lord’s experiment, both groups clung to their initial beliefs in the face of new evidence undermining those beliefs. They saw their favorite candidate’s reversal as something smart, while condemning the flip-flops of the other candidate. Looking at what was happening in their brains during all this, Westen observed, “We did not see any increased activation of the parts of the brain normally engaged during reasoning. What we saw instead was a network of emotion circuits lighting up, including circuits hypothesized to be involved in regulating emotion, and circuits known to be involved in resolving conflicts.”

When partisan subjects saw their own favorite candidate “flip-flopping” on an issue, Westen’s research[2] found correlated activity in brain areas associated with dissonance and even pain (the anterior cingulate cortex). The theory, therefore, is that we tell ourselves little lies and reject contradictory evidence to make that dissonance, the pain of being wrong, go away. Worse, says Westen, once we do that, another part of the brain (the ventral striatum) kicks in with chemical rewards (dopamine) that reinforce the little lie. The implication is that humans are wired through evolutionary development to resist being proven wrong.

Jason Reifler, assistant professor of political science at Georgia State University, has also pushed the investigation of motivated reasoning further. In 2011[3], he too encountered a strong “backfire effect” when presenting subjects with evidence that they were incorrect. Even when the evidence appeared incontrovertible, and the truth could easily be found in the public domain, subjects discounted it rather than change their minds. They, too, dug in their heels and reported feeling even more convinced and determined than before seeing evidence that contradicted their views. But Reifler did discover an interesting avenue to opening minds. He found that if subjects were first primed with self-affirmation (e.g., by writing about a value important to them and an instance when they felt particularly good about themselves), they were more flexible and more willing to reconsider their views. He attributes this to dissociating the person’s identity from their view. Without that step, he theorizes, a person’s identity and self-esteem are inextricably linked to the view they’ve espoused, so attacking their view amounts to attacking them as a person. Reifler also found, without being able to explain why, that graphical evidence tends to persuade more effectively than text.

[1] “Biased Assimilation and Attitude Polarization: The Effects of Prior Theories on Subsequently Considered Evidence,” Lord, Ross, and Lepper, 1979

[2] “Neural Bases of Motivated Reasoning: An fMRI Study of Emotional Constraints on Partisan Political Judgment in the 2004 U.S. Presidential Election,” Westen et al., 2006

[3] “Opening the Political Mind? The Effects of Self-Affirmation and Graphical Information on Factual Misperceptions,” Jason Reifler and Brendan Nyhan, 2011

Christopher Graves is the Global Chairman of Ogilvy Public Relations, Chair of The PR Council and a Trustee for IPR.