Latest Evaluation Models – UK Government Evaluation Cycle, EU Guidelines, and More

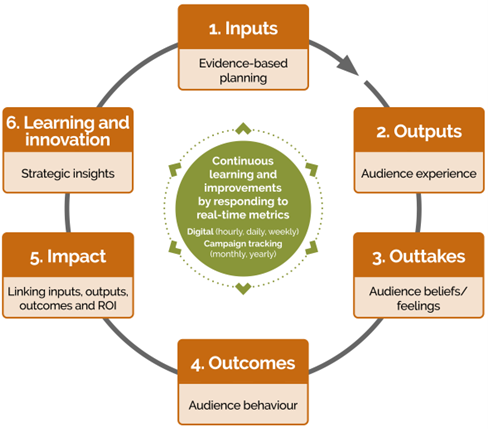

Advancements continue to be made in measurement and evaluation, a longstanding hot topic and an identified area for improvement in public relations and related practices. While having multiple models and frameworks can lead to confusion over which is best, three new tools offer both theoretical and practical contributions to advance the field.

In August, the UK Government Communication Service (GCS) introduced its new GCS Evaluation Cycle [1]. Having relied on a traditional five-stage, linear program logic model since 2016, the GCS has now adopted a six-stage cycle. The updated model retains the essential stages (inputs, outputs, outtakes, outcomes, and impact) while adding “learning and innovation” and presenting the stages as a continuous cycle.

This echoes the measurement, evaluation, and learning (MEL) model adopted by the World Health Organization (WHO) in 2022 for tracking its public health communication during the COVID-19 pandemic and for World Health Days [2]. Adding learning as an explicit stage shifts the focus of evaluation from ‘rear-view mirror’ reporting to generating insights that inform future strategic planning and enable continuous improvement and innovation.

Figure 1. UK Government Communication Service Evaluation Cycle.


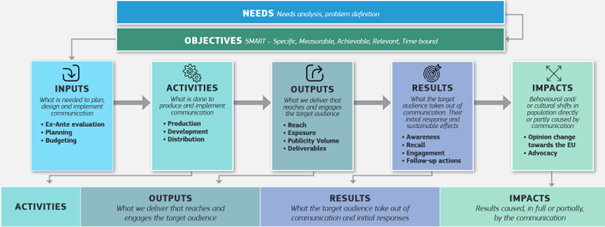

The second useful recent addition to the measurement, evaluation, and learning armoury for communicators is the latest version of the European Commission’s indicators guide. It retains a traditional five-stage program logic model (albeit with outcomes referred to as “results”) and adds brief descriptions of what each stage potentially involves. Most usefully, the EC Indicators Guide provides a table beneath the logic model listing typical quantitative and qualitative indicators that can demonstrate effectiveness at each stage. The Indicators Guide is freely available online.

The EC Indicators Guide is similar to the “taxonomy” of metrics and indicators published by the International Association for Measurement and Evaluation of Communication (AMEC) [3]. Stay tuned: the AMEC taxonomy (a categorized list) of metrics and indicators relevant to various types of communication, ranging from media publicity and websites to multimedia campaigns, has been substantially updated and expanded, and the new version will be published online soon.

Figure 2. EU Measurement and Evaluation Indicators Guide [4].


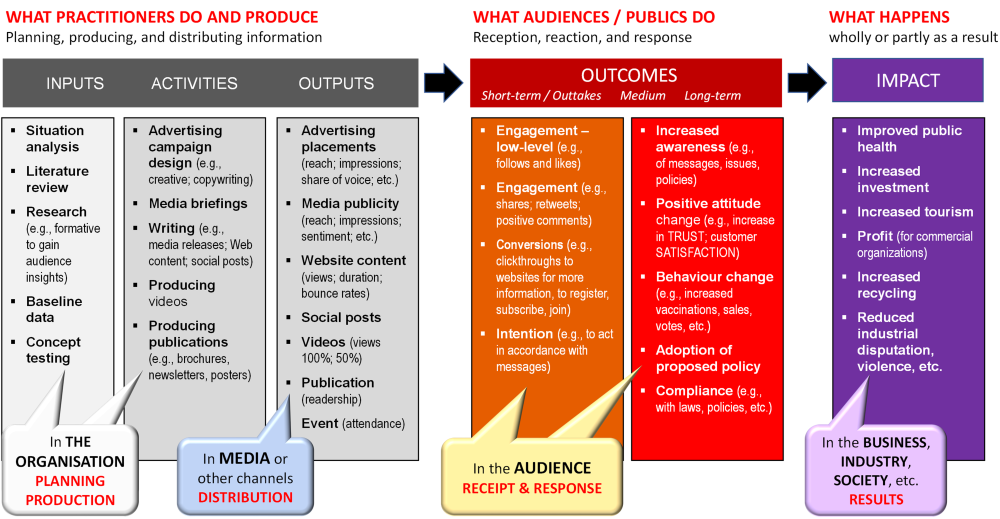

Another practical model addresses one of the most persistent causes of invalid measurement – what PR academics refer to as “substitution error”. In the words of eminent US PR scholar Jim Grunig, this involves using “a metric gathered at one level of analysis to [allegedly] show an outcome at a higher level of analysis”. An example is claiming media reach or impressions as an outcome. This is invalid because reach and impressions are estimates of the potential audience based on media circulation or audience data; the data provide no evidence that people actually saw the content or, even if they did, that it had any effect.

The ‘dissected’ program logic model for public communication published in Public Relations Review in 2023 (see Figure 3) is based on two questions, or tests: (1) Who is doing the thing reported (the Doer Test)? (2) Where is the reported metric occurring (the Site Test)? The model shows typical communication inputs and activities, as well as outputs that are planned, produced, and distributed by organizations. These are things practitioners do, and their metrics report what appears in media of some kind (e.g., press, websites, social media). Outtakes and outcomes measure what audiences do in terms of reception, reaction, and response, which are separate and distinct stages, while impacts are a further stage of flow-on effects in industry, policy, or society. The ‘dissected’ program logic model thus provides a simple way to check which metrics and indicators are relevant at each stage.

Figure 3. The ‘dissected’ program logic model of typical public communication activities [5].
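To make the two tests concrete, here is a minimal Python sketch that flags substitution error by comparing the stage a metric is claimed to show against the highest stage its Doer and Site answers can support. The stage names follow the model described above, but the Doer/Site categories, the mapping rules, and the function names are hypothetical simplifications, not the published model’s own procedure. In particular, the sketch does not separate outtakes from outcomes, which would also require knowing whether the audience activity is reception, reaction, or response.

```python
from dataclasses import dataclass
from enum import IntEnum


class Stage(IntEnum):
    """Program logic stages, ordered from lowest to highest level of analysis."""
    INPUTS_ACTIVITIES = 1
    OUTPUTS = 2
    OUTTAKES = 3
    OUTCOMES = 4
    IMPACT = 5


@dataclass
class Metric:
    name: str
    doer: str             # Doer Test answer (hypothetical): "organization", "audience" or "society"
    site: str             # Site Test answer (hypothetical): "organization", "media", "audience" or "society"
    claimed_stage: Stage  # stage the report attributes the metric to


def highest_supported_stage(metric: Metric) -> Stage:
    """Highest stage a metric can evidence, given its Doer and Site answers.

    Hypothetical rules: effects in industry, policy or society are impact;
    things audiences do can evidence outtakes or outcomes; things the
    organization does are outputs once they sit in media, otherwise
    inputs/activities.
    """
    if metric.doer == "society" or metric.site == "society":
        return Stage.IMPACT
    if metric.doer == "audience":
        return Stage.OUTCOMES
    if metric.site == "media":
        return Stage.OUTPUTS
    return Stage.INPUTS_ACTIVITIES


def is_substitution_error(metric: Metric) -> bool:
    """True if the metric is claimed at a higher level than it can support."""
    return metric.claimed_stage > highest_supported_stage(metric)


if __name__ == "__main__":
    examples = [
        Metric("Media impressions", "organization", "media", Stage.OUTCOMES),
        Metric("Campaign budget spent", "organization", "organization", Stage.INPUTS_ACTIVITIES),
        Metric("Survey: recall of key message", "audience", "audience", Stage.OUTTAKES),
    ]
    for m in examples:
        verdict = "SUBSTITUTION ERROR" if is_substitution_error(m) else "ok"
        print(f"{m.name}: claimed {m.claimed_stage.name}, "
              f"supported up to {highest_supported_stage(m).name} -> {verdict}")
```

Media impressions claimed as an outcome are flagged because a metric whose doer is the organization and whose site is the media can, at most, evidence outputs.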


While researchers need to be careful to not flood the field with ever-changing models, these latest developments have a strong orientation to guiding practice and serving as practical tools for measurement, evaluation, and learning.


Jim Macnamara is Distinguished Professor of Public Communication in the School of Communication at the University of Technology Sydney (UTS). He is a widely published author on evaluation and organizational listening, including the books Evaluating Public Communication: Exploring New Models, Standards, and Best Practice (Routledge, 2018) and Organizational Listening II: Expanding the Concept, Theory, and Practice (Peter Lang, New York, 2024).

References:

[1] Government Communication Service. (2024). GCS Evaluation Cycle. https://gcs.civilservice.gov.uk/publications/gcs-evaluation-cycle

[2] Macnamara, J. (2023). Learnings from three years leading evaluation of WHO communication during COVID-19. Keynote presentation to 2023 AMEC Global Summit, Miami, Florida.

[3] Macnamara, J. (2016). A taxonomy of evaluation: Towards standards. Association for Measurement and Evaluation of Communication. https://amecorg.com/amecframework/home/supporting-material/taxonomy

[4] European Commission. (2024). Communication, Monitoring, Indicators – Supporting Guide. https://commission.europa.eu/system/files/2019-10/communication_network_indicators_supporting_guide.pdf

[5] Macnamara, J. (2023). A call for reconfiguring evaluation models, pedagogy, and practice: Beyond reporting media-centric outputs and fake impact scores. Public Relations Review, 49(2). https://doi.org/10.1016/j.pubrev.2023.102311


This post is provided by the IPR Measurement Commission.

It was a year of ongoing changes at the box office and in sports media, intersecting with national elections. Several case examples offered insights for the future of PR measurement.

Small is Big…

Few expected Joker: Folie à Deux, a film with a $200 million budget, to fail to reach #1 at the box office in its second weekend, especially as no major studio fare was released that weekend. In its place? Terrifier 3, a micro-budgeted film that spent only half a million dollars on promotion. Rather than trying to recreate a traditional campaign on a smaller scale, the horror franchise featuring the character Art the Clown took an innovative yet straightforward approach to digital PR. One effort got fans to dial the clown’s hotline, where they heard a creepy message and received a one-cent Venmo payment in return. Another featured Art the Clown simply walking by a billboard of Joker and shaking his head; the guerrilla PR gag received 138,000 likes on Instagram alone.

Lesson: In the old days, small campaigns often limited their budgets by merely scaling back the size or scope of a traditional campaign. With digital platforms collapsing costs and rewarding ingenuity: don’t do small, do different. Then make sure your metrics match these unique approaches instead of merely replicating your macro approaches or formulas.

…And Big is Small

Consider what it even means to be “big” anymore. Inside Out 2 helped save the summer box office by becoming the highest-grossing animated film of all time worldwide. However, its $652 million domestic gross means, based on the average ticket price, that only about 15% of the country saw it in theaters, assuming there were no repeat customers. Let that sink in: a record-breaking film was still not seen in theaters by 85% of the country.

Lesson: Apply this note to any product or category, and a lot of success can be found in the margins. Examine your metrics. Are your measures capturing the margins or missing the nuances, even for situations that may seem like macro products or realities?

Changing Demographics and Impacts

Both films also significantly overperformed with Latino and Hispanic audiences, long one of the fastest-growing populations in the country. Though very different films, in their opening weekends Latinos and Hispanics made up 48% of Terrifier 3’s audience and 38% of Inside Out 2’s, even though the community makes up only about 20% of the overall U.S. population.

Lesson: Contextualize your measures and note growing trends. Using these films as examples, horror films skew younger, and Hispanics are not only one of the fastest-growing populations but also one of the nation’s youngest consumer populations. Hispanics are also more likely to have younger families with kids. Finally, both films beat tracking expectations in part because these audiences tended to supply stronger walk-up business at the box office, which metrics that rely heavily on digital pre-sales do not fully capture. So create multi-dimensional metrics that capture your audience’s lives, habits, digital footprints and purchasing routines, to better understand the people behind the numbers.

Digital Live Sports Changes the Game…and Politics

While live sports have slowed the avalanche of cord cutting over the last decade, 2024 saw accelerated shifts. Although the trend is not new this year, Amazon, Peacock and Paramount continued to expand their coverage of live games on their platforms. And Netflix’s airing of a live comedy roast of Tom Brady received over two million views on its debut night alone and generated further chatter in the following days.

These fractious currents of information sources across sports, entertainment and culture were further seen in politics and the 2024 election. Few cases were more high-profile than podcasts: shows whose hosts cross all these lanes, such as The Joe Rogan Experience, sometimes dominated traditional political news. Rogan’s interview with Donald Trump obtained over 38 million views on YouTube alone in just over three days, not including audiences that listened on platforms like Spotify, where Rogan has over 14 million followers. Vice President Kamala Harris took a similar approach, giving an interview on the Call Her Daddy podcast and holding a town hall on The Breakfast Club. This was no surprise, as podcasts ranked on a par with print publications as a top election information source this year.

Lesson: With live sports and entertainment now fully bridging the digital divide, and their intersection with politics accelerating in 2024, industry metrics need to account for these channels’ influence far beyond their traditional spheres.

Here’s to these trends continuing – and new ones forming – in 2025!
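The reach estimate in “…And Big is Small” and the audience-share comparison in “Changing Demographics and Impacts” are both simple arithmetic, sketched below in Python. The average ticket price ($13) and the U.S. population (335 million) are assumptions chosen for illustration, not figures from the post. The over-index (audience share divided by population share, times 100) is a common media-research convention rather than a method the author specifies.

```python
def share_of_population_reached(gross_usd: float, avg_ticket_usd: float,
                                population: float) -> float:
    """Upper-bound share of a population that saw a film in theaters.

    Tickets sold = gross / average ticket price. Treating every ticket as a
    distinct person (no repeat viewings) gives an upper bound on reach.
    """
    tickets_sold = gross_usd / avg_ticket_usd
    return tickets_sold / population


def over_index(audience_share: float, population_share: float) -> float:
    """Audience composition index: 100 means proportional representation."""
    return 100 * audience_share / population_share


if __name__ == "__main__":
    # ASSUMED inputs: ticket price and population are illustrative, not from the post.
    reach = share_of_population_reached(652e6, avg_ticket_usd=13.0, population=335e6)
    print(f"Inside Out 2 domestic reach (upper bound): ~{reach:.0%}")

    # Audience and population shares as reported in the post.
    print(f"Terrifier 3 Latino/Hispanic index: {over_index(0.48, 0.20):.0f}")
    print(f"Inside Out 2 Latino/Hispanic index: {over_index(0.38, 0.20):.0f}")
```

An index above 100 means a group is over-represented in the audience: an index of 240 means Latino and Hispanic moviegoers made up 2.4 times their population share of Terrifier 3’s opening-weekend audience. Repeat viewings would push the true reach lower than the first figure.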


Lightning Czabovsky, J.D., Ph.D., is an Associate Professor at the University of North Carolina at Chapel Hill. His work focuses on the intersection of diverse audiences, audience analysis, public opinion and creative PR strategies. His background crosses professional sectors, and he’s been published in many industry and scholarly outlets.


This blog is provided by the IPR Measurement Commission.

Accurate and timely media analysis is crucial to shaping public relations strategies and measuring audience impact. Artificial intelligence (AI) can sift through vast amounts of content quickly, often reducing the time needed to identify trends and sentiment from hours or days to minutes (Whitaker, 2017).

However, as organizations increasingly adopt AI for data processing and insights, it is essential to identify best practices for when to use AI and when to rely on human expertise. Human insight is often irreplaceable when analyzing nuanced topics or datasets. While technologies such as automation and machine learning have been used successfully in PR, there is industry-wide hesitation about large-scale AI implementation because of accuracy gaps and transparency issues.

After leading media measurement teams for 15-plus years, I’ve learned that AI usage doesn’t have to be an either-or approach. Strategically combining the strengths of AI with the critical thinking and creativity of PR professionals allows organizations to accelerate and enhance media analysis, leading to more informed decision-making and more impactful communication strategies.

Humans Where Humans Make Sense; Machines Where Machines Make Sense

Researchers found that human sentiment coding had an average accuracy rate of 85%, compared with 59% for AI (van Atteveldt et al., 2021). But while human coding is more accurate, AI outranks humans in efficiency. An exploration of AI’s use in coding practices shows a 40% reduction in analysis time with AI (Kakhiani, 2024), and industry reviews credit AI with working 100 times faster than human coders (Diamandis, 2024; Kaoukji, 2023).

So, what’s the lesson? The key is to use humans where humans make sense and machines where machines make sense.
Different factors and contexts should be considered:

- Humans make the most sense when you have more time, when the data volume is more manageable, when accuracy is crucial, when results will inform senior-level decision-making, and when topics are more complex or nuanced.
- When a fast turnaround is of the essence (such as in crisis response), when you’re working with massive datasets, or when topics are more clear-cut, AI with human supervision is likely the better option.

It’s also imperative for organizations to enact AI usage and disclosure policies and to introduce greater transparency into the AI models they use, in order to maintain and build trust with audiences.

“This means higher data standards, greater transparency and documentation of AI systems, measurement and auditing of its functions (and model performance), and enabling human oversight and ongoing monitoring,” explains Converseon founder and IPR Measurement Commission member Rob Key. “In the near future, it is likely that almost every leading organization will have a form of AI policy in place that will adhere closely to these standards.”

Human vs. Machine: Best Practices and Factors to Consider

In most cases, AI and humans should be used side by side. Organizations should favor human-in-the-loop AI models, which incorporate human input into the model’s training and outputs, and should weigh a range of factors when deciding whether to deploy machines or human resources. Here are the most impactful:

1.) Timing and Speed: AI can process data much faster than humans, making it ideal for time-sensitive analyses.

2.) Data Volume: AI excels at identifying patterns and trends in large datasets that human analysts may miss. This makes machines ideal for tasks such as tracking mentions or identifying trends across wide datasets. But in complex industries such as healthcare or financial services, understanding the implications of regulations, policies, or industry-specific jargon is critical and is likely better suited to a human.

3.) Accuracy: AI often struggles with nuanced interpretations, while human coders can apply context and critical thinking in analyses where subtlety matters. Human coders can also contextualize and verify automated results. In sentiment analysis, for example, automated tools struggle with nuances like sarcasm, cultural context, and double meanings.

4.) Audience: AI might lack the sensitivity needed for certain audiences. If the analysis needs to resonate deeply with a specific demographic or requires nuanced contextual understanding, human coders may be a better fit.

5.) Decision Impact: If the results will drive significant business decisions, the depth of understanding that human analysts provide might be more appropriate. The stakes involved can justify the added time and resources.

6.) Topic Complexity: AI excels in straightforward, data-driven analyses. For intricate or abstract subjects that require deep understanding or emotional intelligence, human analysts may be more effective. Human curation is also vital when assessing the credibility and impact of sources: media measurement is more than counting mentions or clicks; it’s about understanding who is speaking, their level of influence, and the quality of their engagement.

From my experience and available research, I consider it best practice to use machines for initial data collection, aggregation, and basic sentiment analysis, and to incorporate human analysis for contextual understanding, sentiment refinement, and evaluating the importance of key opinion leaders or sources. It’s also important to audit automated tools for accuracy regularly.

Conclusion

Integrating human expertise with automation is vital to delivering comprehensive and reliable media measurement. Media analysis companies can combine trusted human analysis and advanced AI capabilities to provide quality, timely results. However, organizations must also be transparent about their use of AI so that those relying on the outputs can interpret them in an informed way.

Indeed, the rising prioritization of trusted AI, meaning AI systems that are transparent, reliable, and ethically sound, requires organizations to adopt ethical guidelines for AI use. By building trust in AI technologies and supplementing AI’s efficiency with human insight, organizations can harness the technology’s full potential while safeguarding against biases and inaccuracies, ultimately leading to more informed and impactful outcomes in media analysis.
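The human-in-the-loop workflow recommended above (machines for first-pass coding, humans for refinement and high-stakes items, plus regular accuracy audits) can be sketched in a few lines of Python. This is a minimal illustration, assuming a sentiment model that returns a label with a confidence score; the 0.8 threshold, the `Item` fields, and the `route` and `audit_agreement` helpers are hypothetical, not a specific vendor’s API. The audit uses simple percent agreement; chance-corrected statistics such as Cohen’s kappa are a common alternative.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Item:
    doc_id: str
    machine_label: str                 # e.g. "positive", "neutral", "negative"
    machine_confidence: float          # 0.0-1.0, as reported by the (hypothetical) model
    high_stakes: bool = False          # e.g. results feed a senior-level decision
    human_label: Optional[str] = None  # filled in when a human codes the item


def route(items: List[Item], threshold: float = 0.8) -> Dict[str, List[Item]]:
    """Send low-confidence or high-stakes items to human coders; auto-accept the rest."""
    queues: Dict[str, List[Item]] = {"auto": [], "human_review": []}
    for item in items:
        if item.high_stakes or item.machine_confidence < threshold:
            queues["human_review"].append(item)
        else:
            queues["auto"].append(item)
    return queues


def audit_agreement(sample: List[Item]) -> float:
    """Share of human-coded items where the machine label matches the human label."""
    coded = [i for i in sample if i.human_label is not None]
    if not coded:
        raise ValueError("audit sample has no human-coded items")
    matches = sum(1 for i in coded if i.machine_label == i.human_label)
    return matches / len(coded)


if __name__ == "__main__":
    batch = [
        Item("post-1", "positive", 0.93),
        Item("post-2", "negative", 0.61),                   # low confidence
        Item("post-3", "neutral", 0.97, high_stakes=True),  # feeds a board briefing
    ]
    queues = route(batch)
    print(len(queues["auto"]), "auto-accepted;",
          len(queues["human_review"]), "sent to human coders")

    # Periodic audit: human coders re-code a sample of machine-labelled items.
    batch[0].human_label = "positive"
    batch[1].human_label = "neutral"
    print(f"Machine-human agreement on audit sample: {audit_agreement(batch):.0%}")
```

Raising the threshold shifts more work to human coders in exchange for accuracy, which mirrors the time-versus-accuracy trade-off described in the factors above.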

This blog is provided by the IPR Measurement Commission.Accurate and timely media analysis is crucial to shaping public relations strategies and measuring audience impact. Artificial intelligence (AI) can efficiently sift through vast amounts of content in minutes, often reducing the time to identify trends and sentiment from hours or days to mere minutes (Whitaker, 2017).However, as organizations increasingly adopt AI for data processing and insights, it is essential to identify best practices around when to use AI and when to rely on human expertise. Human insight is often irreplaceable when analyzing nuanced topics or datasets. While technologies such as automation and machine learning have been successfully used in PR, an industry-wide hesitation exists around large-scale AI implementation due to accuracy gaps and transparency issues.After leading media measurement teams for 15-plus years, I’ve learned that AI usage doesn’t have to be an either-or approach. Strategically combining the strengths of AI with the critical thinking and creativity of PR professionals allows organizations to accelerate and enhance media analysis efforts, leading to more informed decision-making and impactful communication strategies.Humans Where Humans Make Sense; Machines Where Machines Make SenseResearchers found that human sentiment coding had an average accuracy rate of 85% compared to 59% for AI (van Atteveldt et al., 2021). It is important to note that while human coding accuracy ranks higher than the machine, AI outranks humans in terms of efficiency. An exploration of AI’s use in coding practices shows a 40% reduction in analysis time with AI (Kakhiani, 2024), and industry reviews recognize the superpower of AI to work 100 times faster than human coders (Diamandis, 2024; Kaoukji, 2023).So, what’s the lesson? The key is to use humans where humans make sense and machines where machines make sense. 
Different factors and contexts should be considered:

– Humans make the most sense when you have more time, when the data volume is manageable, when accuracy is crucial, when results will inform senior-level decision-making, and when topics are complex or nuanced.
– When a fast turnaround is essential (as in crisis response), when you’re working with massive datasets, or when topics are more clear-cut, AI with human supervision is likely the better option.

It’s also imperative for organizations to enact AI usage and disclosure policies and to introduce greater transparency into the AI models they use, in order to maintain and build trust with audiences.

“This means higher data standards, greater transparency and documentation of AI systems, measurement and auditing of its functions (and model performance), and enabling human oversight and ongoing monitoring,” explains Converseon founder and IPR Measurement Commission member Rob Key. “In the near future, it is likely that almost every leading organization will have a form of AI policy in place that will adhere closely to these standards.”

Human vs. Machine: Best Practices and Factors to Consider

In most cases, AI and humans should be used side by side. Organizations should favor human-in-the-loop AI models, which incorporate human input into the model’s training and outputs, and should weigh a range of factors when deciding whether to deploy machines or human resources. Here are the most impactful:

1.) Timing and Speed: AI can process data much faster than humans, making it ideal for time-sensitive analyses.
2.) Data Volume: AI excels at identifying patterns and trends in large datasets that human analysts may miss. This makes machines ideal for tasks such as tracking mentions or identifying trends across wide datasets. But in complex industries such as healthcare or financial services, understanding the implications of regulations, policies, or industry-specific jargon is critical and is likely better suited to a human.
3.) Accuracy: AI often struggles with nuanced interpretation, while human coders can apply context and critical thinking in analyses where subtlety matters. Human coders can also contextualize and verify automated results. In sentiment analysis, for example, automated tools struggle with nuances like sarcasm, cultural context, and double meanings.
4.) Audience: AI might lack the sensitivity needed for certain audiences. If the analysis needs to resonate deeply with a specific demographic or requires nuanced contextual understanding, human coders may be a better fit.
5.) Decision Impact: If the results will drive significant business decisions, the depth of understanding that human analysts provide may be more appropriate. The stakes involved can justify the added time and resources.
6.) Topic Complexity: AI excels at straightforward, data-driven analyses. For intricate or abstract subjects that require deep understanding or emotional intelligence, human analysts may be more effective. Human curation is also vital when assessing the credibility and impact of sources. Media measurement is more than counting mentions or clicks: it’s about understanding who is speaking, their level of influence, and the quality of their engagement.

From my experience and the available research, I consider it best practice to use machines for initial data collection, aggregation, and basic sentiment analysis, and to incorporate human analysis for contextual understanding, sentiment refinement, and evaluating the importance of key opinion leaders or sources. It’s also important to audit automated tools for accuracy regularly.

Conclusion

Integrating human expertise with automation is vital to delivering comprehensive and reliable media measurement. Media analysis companies can combine trusted human analysis with advanced AI capabilities to deliver high-quality, timely results.
However, organizations must also be transparent about their use of AI so that audiences can consume its outputs in an informed way.

Indeed, the rising prioritization of trusted AI (ensuring that AI systems are transparent, reliable, and ethically sound) means organizations must adopt ethical guidelines for AI use. By building trust in AI technologies and supplementing AI’s efficiency with human insight, organizations can harness the technology’s full potential while safeguarding against biases and inaccuracies, ultimately leading to more informed and impactful outcomes in media analysis.
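As a rough illustration of how a team might operationalize the six factors and the accuracy audit described in this post, the Python sketch below encodes them as a simple rule of thumb. It is a hypothetical example, not Fullintel’s or the IPR Measurement Commission’s method: the `AnalysisTask` fields, the 10,000-item “large dataset” threshold, the signal-counting rule, and the sentiment labels are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical threshold for a "massive" dataset; tune to your own workload.
LARGE_VOLUME = 10_000


@dataclass
class AnalysisTask:
    """Hypothetical description of a media-analysis job, one field per factor."""
    urgent: bool              # Timing and speed (e.g., crisis response)
    item_count: int           # Data volume (number of articles/posts)
    accuracy_critical: bool   # Accuracy: sarcasm, cultural context, double meanings
    sensitive_audience: bool  # Audience
    senior_decision: bool     # Decision impact
    complex_topic: bool       # Topic complexity (regulation, jargon, abstract subjects)


def choose_approach(task: AnalysisTask) -> str:
    """Count factors favoring humans vs. machines; ties go to AI with human review."""
    human_signals = sum([
        task.accuracy_critical,
        task.sensitive_audience,
        task.senior_decision,
        task.complex_topic,
    ])
    machine_signals = sum([task.urgent, task.item_count >= LARGE_VOLUME])
    if human_signals > machine_signals:
        return "human-led"
    return "ai-led with human review"


def audit_accuracy(ai_labels: list[str], human_labels: list[str]) -> float:
    """Share of items where the AI sentiment label matches the human-coded label."""
    if not ai_labels or len(ai_labels) != len(human_labels):
        raise ValueError("need two non-empty label lists of equal length")
    matches = sum(a == h for a, h in zip(ai_labels, human_labels))
    return matches / len(ai_labels)


if __name__ == "__main__":
    crisis = AnalysisTask(urgent=True, item_count=50_000, accuracy_critical=False,
                          sensitive_audience=False, senior_decision=False,
                          complex_topic=False)
    board_report = AnalysisTask(urgent=False, item_count=800, accuracy_critical=True,
                                sensitive_audience=False, senior_decision=True,
                                complex_topic=True)
    print(choose_approach(crisis))        # ai-led with human review
    print(choose_approach(board_report))  # human-led
    sample_ai = ["positive", "negative", "neutral", "negative"]
    sample_human = ["positive", "negative", "negative", "negative"]
    print(audit_accuracy(sample_ai, sample_human))  # 0.75
```

Ties default to AI with human review, reflecting the post’s point that in most cases AI and humans should work side by side. `audit_accuracy` treats a human-coded sample as the reference, so it reports how often the tool agrees with human coders; a fuller audit might also use chance-corrected agreement measures such as Cohen’s kappa.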

For more than 15 years, Angela Dwyer has balanced human expertise and automation in PR measurement to help brands prove value and improve communications performance. Angela is Head of Insights at Fullintel and director of the IPR Measurement Commission. She has developed measurement systems, expanded global companies, and designed science-backed metrics.

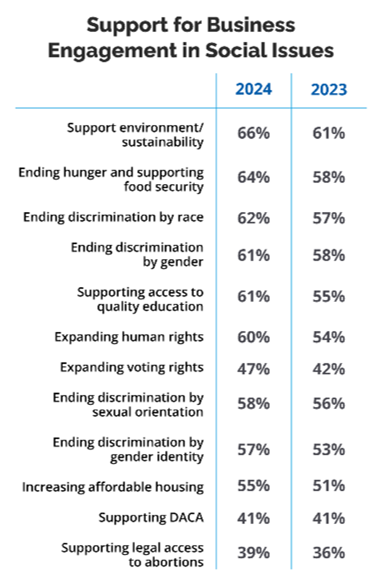

This blog is provided by the IPR Center for Diversity, Equity, and Inclusion.

Despite backlash from leaders who have called companies “woke” for supporting social causes, public expectations for corporate engagement in these issues have increased in the past year, according to a new Public Affairs Council poll. Of the 12 social issues tested in the survey, 11 saw increases in public support for corporate engagement. Support for the remaining issue, creating pathways to citizenship through DACA, held steady, with 41% approving of business involvement and 38% opposing it.

The 2024 Public Affairs Pulse Survey, conducted Sept. 1-3, 2024, by Morning Consult, provides an in-depth look at American public opinion on challenges and issues facing business, government, and society.

Social causes with the largest jumps in public support were efforts to end hunger, provide access to quality education, and support human rights. Each of these issues gained six percentage points since 2023. Causes that gained five points included actions to protect the environment, end racial discrimination, and expand voting rights.

When responses are sorted by political affiliation, the differences of opinion are huge. Approval gaps between Democrats and Republicans range from 13 percentage points (supporting education and food security) to 27 percentage points (ensuring access to abortions). Other social issues with large political divides include actions to end discrimination by gender identity (26 percentage points) and actions to support DACA (25 percentage points).

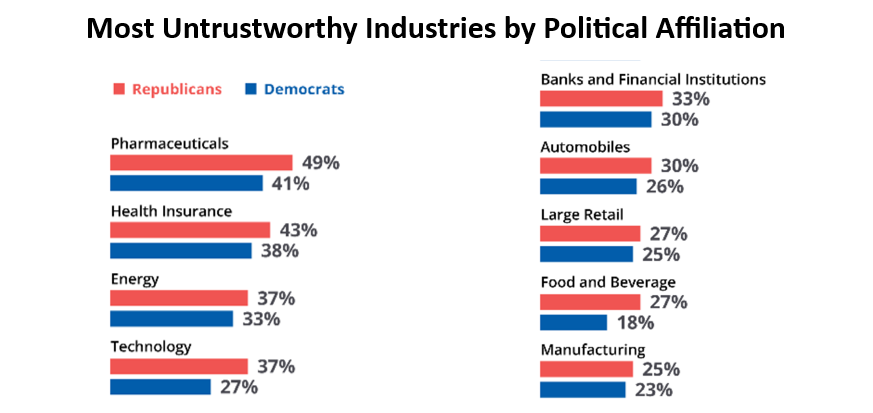

Are the Democrats Now the Pro-Business Party?

57% of Americans consider the Republican Party to be pro-business, while only 43% believe the Democratic Party is pro-business. These results are similar to the 2023 findings and support the notion that the GOP is still more likely to support corporations and their policy goals. However, 69% of Democrats now say their party is pro-business, and only 49% of Democrats say Republicans are pro-business.

When we examine overall favorability toward business, Republicans respond as expected: 55% of GOP members have a generally favorable opinion of major companies, compared with only 48% of Democrats.

The picture becomes hazier, however, when we look at Republican and Democratic attitudes about how well major companies are carrying out basic business functions. Republicans are less likely than Democrats to believe major companies are effectively providing useful products and services (54% vs. 61%), serving customers (51% vs. 54%), creating jobs (46% vs. 48%), and serving stockholders (46% vs. 48%).

Now let’s look at data on perceived untrustworthiness of corporations by industry and political affiliation. Given the pro-business reputation of the GOP, it’s surprising that Republicans are more distrustful of major companies in all nine sectors tested: pharmaceuticals, health insurance, energy, technology, banks and financial institutions, automobiles, large retail, food and beverage, and manufacturing.

For example, while 37% of Republicans view tech companies as more untrustworthy than average, only 27% of Democrats agree, a 10-percentage-point difference.

If you’re keeping score at home, GOP voters are more likely to criticize many aspects of business performance, from providing useful products and services to creating jobs. They are also more likely to label companies in every major sector as more untrustworthy than average. And, finally, many of them strongly oppose corporate involvement in social issues, even when those issues are popular and companies have been supporting them for decades. Why, then, do so many Americans believe the GOP is the “party of business”?

The answer lies in Republicans’ instinctive dislike and distrust of government regulation.

Parties Have Divergent Views about Regulation

Each year we ask Pulse Survey respondents to rate the same nine industries on whether they need more regulation than other industries. In 2024, the pharmaceutical industry is once again considered the sector most in need of regulation, followed by health insurance. Energy comes in third this year, up from fourth. The biggest change, however, involves the food and beverage industry, which was considered the sector least in need of more regulation last year (ninth out of nine industries) but this year ranks sixth.

Even when many Americans believe a sector is underregulated, Republicans are often satisfied with the current regulatory level or may even consider the industry overburdened by regulation. For example, 33% of the public currently believes the energy sector is underregulated, and only 20% think it is overregulated. GOP voters disagree, with 30% saying it is overregulated and only 26% considering it underregulated.

Democrats, meanwhile, take the opposite stance: they consistently believe a given sector is more in need of regulation than the average American does.
In the case of energy, 43% of Democrats would like to see more regulation, and only 12% think government is already regulating that sector too much.

And so, what we have are two political parties that view themselves as pro-business. The first doesn’t seem to trust major companies very much but has a strong bias against regulation. The second trusts corporations more and clearly likes their products, services, and engagement in social issues, but has a strong bias in favor of regulation.

What this means is that there is no longer a pro-business party that companies can generally count on to support their public policy goals. Depending on a host of factors, including the salience of the issue, partisan politics, media attention, and the political calendar, companies will have an increasingly difficult time sorting out their allies from their opponents.

Doug Pinkham is president of the Public Affairs Council, the leading global association for public affairs professionals. The Council, both nonpartisan and nonpolitical, has more than 750 member companies, associations and universities. You can reach him at dpinkham@pac.org.

This blog is provided by the IPR Organizational Communication Research Center.

Over the past decade, employers have realized the importance of the content employees share about their jobs and the organization on their personal social media accounts. Social media gives employees a platform to praise their organization’s strengths, but also to openly criticize its shortcomings. It is therefore not surprising that many organizations develop a social media governance framework to guide employees’ social media behavior in the right direction.

But how far can organizations go in managing their employees’ social media use? Tesla has faced scrutiny for monitoring the Facebook activity of its workforce, while Sherwin-Williams has been criticized for firing an employee who made TikToks at work. These real-life cases illustrate the fine line employers must walk between protecting the organization from reputational or legal risks and respecting employees’ freedom to express themselves online.

Through our research, An-Sofie Claeys and I helped organizations develop an effective social media governance framework that considers both employer and employee interests. In a recent study, we surveyed 502 Belgian employees to examine how people communicate about work on social media and to what extent this online behavior is influenced by their employers’ social media governance tools. Based on our findings, we offer three recommendations:

1) Prepare the soil. Before considering any social media governance tools, organizations should “prepare the soil.” If they want positive social media behavior to grow among their workforce, they must first create a supportive work environment where employees feel a strong connection and relationship with the organization.

2) Develop a stimulating social media governance framework.
Once the foundations are built, organizations can develop a social media governance framework that stimulates employees’ social media use within well-communicated boundaries. A common mistake organizations make is adopting very restrictive measures (e.g., social media bans, punishments) out of fear that employees will post sensitive or negative things about their job or the organization. This overly restrictive approach is often misplaced. Our research, and that of other scholars, has shown that it is quite unusual for employees to post negative work-related content on social media. In addition, restrictive measures rarely lead to the desired outcome. When organizations ban social media from the workplace or threaten punishments for inappropriate social media use, employees can feel that their freedom is being threatened. This can trigger a sense of resistance, eventually leading them to speak negatively about the organization on social media.

But what approach should organizations take instead? Our research points to two effective social media governance tools for organizations to consider:

– Social media guidelines are a set of rules and recommended practices developed by an organization to guide employees’ social media use. Our research showed that such guidelines successfully stimulate positive online behavior among employees. Organizations will benefit most from these guidelines when they focus on what employees can do on social media rather than only on what they cannot do. In other words, it is best to implement primarily stimulating guidelines (e.g., “As an employee, you can add value to public conversations and online discussions. With your knowledge and expertise, you can provide a framework for others on social media”) and only a limited number of restrictive guidelines (e.g., “You are not, under any circumstances, allowed to disclose confidential or internal information on social media”).
– Rewards are a good way for organizations to show appreciation for their employees’ positive social media behavior. Employees who act as organizational ambassadors on social media can, for instance, be honored with shout-outs or awards at a company event. Other examples of rewards are extra vacation days or financial bonuses. However, organizations should keep in mind that such rewards could undo the positive effects of employee ambassadorship and may even backfire. Amazon, for instance, faced backlash after it came to light that it was paying warehouse workers to say positive things about the company on Twitter.

3) Facilitate employee ambassadorship on social media. Organizations should make it easy for employees to become company ambassadors on social media. A good place to start is the organization’s official social media pages. Does the organization regularly post relevant, pride-inducing content (for instance, about sustainability-related initiatives) that employees would likely repost? It is also important to remember that many employees use social media just for fun. Company events or staff activities can give employees a little nudge to post something positive about work, even if it is just a fun photo with colleagues.

Ellen Soens (ellen.soens@ugent.be) is a PhD candidate at the department of Translation, Interpreting and Communication of Ghent University in Belgium. Her research interests include employee social media use and social media governance. Her current research project, “Understanding and managing employees’ work-related social media use: Minimizing risks and maximizing opportunities”, is funded by the Research Foundation Flanders.

In a new report, “Navigating a Changing Media Landscape,” the Institute for Public Relations and Peppercomm share research conducted with 22 Chief Communications Officers (CCOs) and 22 media relations experts in June and July 2024. The report explores their CEOs’ and Boards of Directors’ (BODs) perspectives on strategies for combating misinformation and disinformation, methods for measuring media relations effectiveness, and predictions for the future of media. Specifically, the report shows how CCOs and media relations specialists envision the future of media relations over the next 18 months and five years, highlighting the growing challenges posed by increased disinformation, polarization, and the expanding role of AI in communication strategies.

Below are some key findings from the report:

– Shrinking Newsrooms: Journalists are stretched thin, resulting in fewer opportunities for earned media and less in-depth reporting. CCOs are finding it increasingly difficult to secure meaningful media coverage.
– Increased Shift Toward Paid and Sponsored Content: With fewer traditional media opportunities, organizations are investing more heavily in paid and sponsored content.
– Mixed Adaptation by Leadership: While some CEOs and Boards of Directors are open to new media strategies, others remain resistant, underscoring the need for CCOs to educate their leadership teams on the future of media.
– Personalization Is Key: As newsrooms continue to shrink and journalist turnover rises, media relations professionals must engage in more personalized and proactive outreach. This includes educating less experienced reporters on industry-specific topics and being more creative in securing coverage.
– Rising Sensationalism and Misinformation: The shift toward “clickbait” content and the rise of AI-generated media have heightened the need for vigilance in media monitoring, as professionals are increasingly focused on combating misinformation and disinformation.
– Emerging Role of AI in Media Relations: AI is expected to play a transformative role in media relations, but that role is currently limited. Executives are just beginning to explore AI’s potential for ideation, content creation, and media monitoring, though concerns remain about its impact on disinformation and the erosion of journalistic standards.

This summary is provided by the IPR Organizational Communication Research Center.

Dr. Chuqing Dong and colleagues studied how employees perceive corporate social responsibility (CSR), how much employees trust their employer, and how this affects their decision to remain at their jobs.

An online survey of 740 full-time employees working at medium or large organizations in the United States was conducted in March 2020. Key findings include:

1.) When employees believed their employer’s CSR efforts were aimed at external gain, they were more likely to distrust their organization.
2.) When CSR efforts were seen as intrinsically motivated (originating from genuine values within the organization), employees were more likely to trust their employer.
3.) Observing self-serving CSR actions eroded employees’ trust in their employer and reduced their confidence in the employer’s ability to handle crises and difficult situations.
4.) Employees had greater intentions to leave their organization when their trust in their employer was diminished.

Implications for Practice

This study highlights the role of the perceptions of character and morality that employees hold about their organization. It also highlights the complex factors behind an employee’s decision to seek work elsewhere. First, organizations and leaders should keep their CSR efforts consistent with their internal mission, ethics, and purpose, and make sure that external efforts mirror the internal culture rather than appearing performative. Second, during turbulent times, organizations should communicate the specific, concrete steps they are taking to manage the turbulence, allowing employees to trust that the situation is under control and not feel compelled to seek alternative employment.

Read the full study here:

...

Zoom evaluated the changing workplace landscape, examining global trends in workplace flexibility along with employee preferences regarding hybrid work.

Two surveys were conducted in collaboration with Reworked INSIGHTS. The first surveyed 624 IT and C-suite leaders, while the second surveyed a total of 1,870 employees during April–May 2024.

Key findings include:

1. 64% of leaders said their workplace currently follows a hybrid work model.
2. The top three hybrid work models were scheduled hybrid (25%), flextime hybrid (22%), and remote from anywhere (17%).
   - Scheduled hybrid refers to employees having designated days or times to work in-office.
   - Flextime hybrid allows employees to choose their working hours but requires in-person attendance for specific meetings or collaborative events.
3. 75% of employees agreed that their organization’s current tools and technology for remote work need improvement.
4. 84% of employees said they get more work done in a hybrid/remote setting than in-office/onsite.

Read the full report here

...

New York, NY – The Institute for Public Relations (IPR) will award the 2024 IPR Jack Felton Medal for Lifetime Achievement to Pauline Draper-Watts, Partner at Abacus Insights Partners. The award will be presented at the 62nd Annual IPR Distinguished Lecture and Awards Gala on Dec. 4, 2024, at The Lighthouse at Chelsea Piers in New York City.

The IPR Jack Felton Gold Medal is a lifetime achievement award given to an individual for their contributions to advancing the importance and use of research, measurement, and evaluation in public relations and corporate communication practice.

“Pauline has paved the way for meaningful, science-driven measurement in the field of public relations,” said Angela Dwyer, IPR Measurement Commission Director and Head of Insights at Fullintel. “In addition to elevating her clients and the profession, she has promoted the success of others along the way as a thoughtful mentor. I couldn’t think of a more deserving person to receive this recognition of a lifetime.”

Draper-Watts has more than 30 years of experience in research, measurement, analytics, and insights within the public relations industry. She is currently a Partner at Abacus Insights Partners, a boutique consulting firm, where she counsels clients on transforming data into meaningful insights and provides analysis to inform future planning, including in specialized areas such as analyst relations and employee communications. Prior to joining Abacus Insights Partners, Draper-Watts led the measurement and analytics practice at Edelman. She also co-founded one of the earliest media measurement companies in Europe, Computerised Media Services (Precis), which was a founding member of AMEC (International Association for Measurement and Evaluation of Communication).
Since then, she has continued to collaborate with various industry groups, including the IPR Measurement Commission, AMEC, ICCO, and PRSA, and played a role in crafting the first two iterations of the Barcelona Principles.

“Pauline Draper-Watts embodies the spirit of the IPR Jack Felton Medal for Lifetime Achievement with her pioneering work in research and measurement, setting a new standard for excellence in public relations,” said Dr. Tina McCorkindale, President and CEO of the Institute for Public Relations. “Her dedication to advancing our field through rigorous, data-driven insights has made a lasting impact, and we’re looking forward to recognizing her for her outstanding contributions.”

Reserve Your Seat(s) at the 62nd Annual IPR Distinguished Lecture & Awards Dinner

Tables of 10 are available for $6,000, and individual tickets are $600 for the awards dinner. The price includes a networking cocktail, the awards dinner, and the Distinguished Lecture. Visit the IPR website to learn more about the 62nd Annual IPR Distinguished Lecture & Awards Gala.

About the Institute for Public Relations

The Institute for Public Relations is an independent, non-profit research foundation dedicated to fostering greater use of research and research-based knowledge in corporate communication and the public relations practice. IPR is dedicated to the science beneath the art of public relations. IPR provides timely insights and applied intelligence that professionals can put to immediate use. All research, including a weekly research letter, is available for free at instituteforpr.org.

Media Contact

Brittany Higginbotham
Communications & Outreach Manager
Institute for Public Relations
brittany@instituteforpr.org
352-392-0280

...